AI for Speech Therapy and Language Acquisition


This post describes our paper on correcting mispronunciations in speech, which was accepted for publication at Interspeech 2022. This is the work of Talia Ben Simon and Felix Kreuk, together with Yaki Cohen and Faten Awwad from Rambam Medical Center.

Learning a new language involves constantly comparing one's own speech productions with reference productions from the environment. Early in speech acquisition, children make articulatory adjustments to match their caregivers' speech, and adult learners of a language adjust their speech to match their teacher's pronunciation.

Can we build a system that gives near-perfect auditory and visual feedback to learners of a new language, or to people who suffer from Speech Sound Disorders (SSD) and need to undergo speech therapy?


Children and adults with SSD have persistent difficulty pronouncing certain words or sounds correctly. They are often referred to speech therapy, which focuses on learning the motor skills of sound production, with feedback given in the form of the correct production. This feedback is the most critical part of the treatment, since it helps develop a proper model of pronunciation.

We propose an algorithm that generates synthetic feedback of the proper pronunciation from the wrong pronunciation, in the speaker's own voice. Suppose, for example, that Ron has trouble pronouncing /r/ in American English, either because he suffers from SSD or because his native language is Greek and he is learning English. The system asks Ron to pronounce the word "right," and Ron pronounces something like "white," replacing /r/ with /w/. The system then tells Ron: "Ron, you pronounced white, but you should have pronounced right," where the word "right" is synthesized in Ron's own voice. Ron can then compare his production with the desired one and try to fix it.

Concretely, we propose to automatically synthesize a waveform with the proper pronunciation from the mispronounced waveform, to serve as reference feedback for the speaker. The generated speech should preserve the speaker's original voice, since this was found to be a critical factor in providing effective feedback (Strömbergsson et al., 2014).

Our model

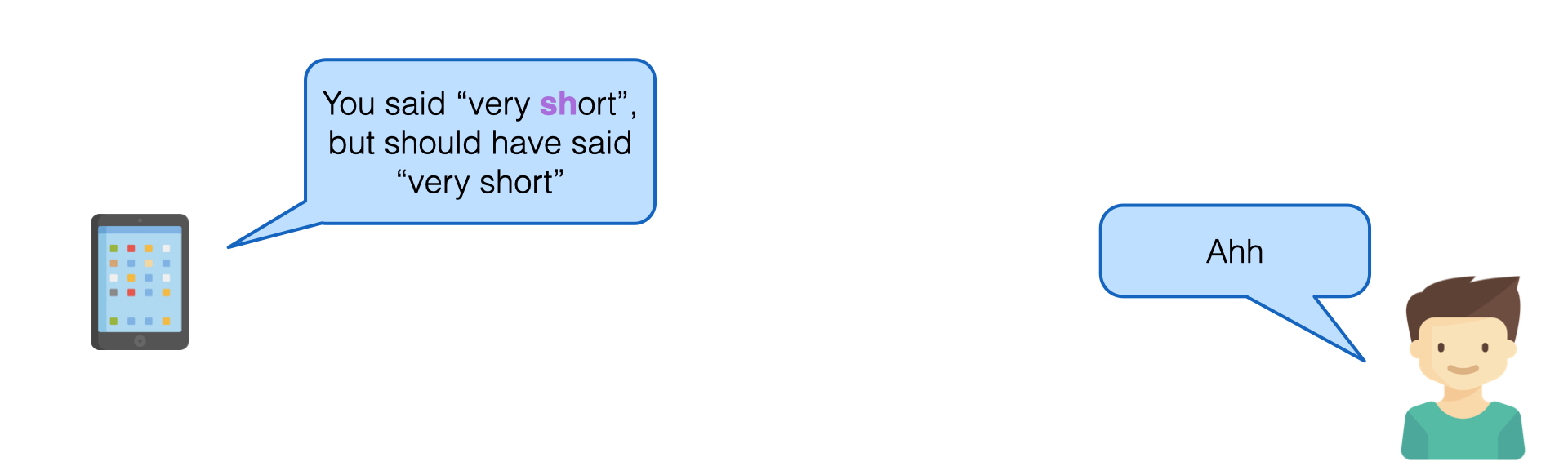

The mobile app asks the user to pronounce a word or a phrase.

The user misproduced /sh/, and the recorded speech sounds like this:

The speech is converted to a spectrogram, and a phoneme-to-speech alignment (forced alignment) is computed.
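As a rough illustration of what the alignment step produces, the sketch below assumes a forced aligner that returns each phoneme with start and end times in seconds, and converts those times into spectrogram frame indices. The `Segment` type, the `to_frames` helper, the 22,050 Hz sample rate, and the hop length of 256 samples are all assumptions for illustration (the last two are common HiFi-GAN settings), not necessarily the paper's configuration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Segment:
    """Hypothetical forced-aligner output: one phoneme with its time span."""
    phoneme: str
    start_s: float  # start time in seconds
    end_s: float    # end time in seconds


def to_frames(segments: List[Segment],
              sample_rate: int = 22050,
              hop_length: int = 256) -> List[Tuple[str, int, int]]:
    """Convert phoneme time spans to [start_frame, end_frame) indices.

    Each spectrogram frame advances by `hop_length` samples, so there are
    sample_rate / hop_length frames per second (assumed values).
    """
    frames_per_second = sample_rate / hop_length
    frames = []
    for seg in segments:
        start = round(seg.start_s * frames_per_second)
        # Guarantee that even a very short phoneme covers at least one frame.
        end = max(start + 1, round(seg.end_s * frames_per_second))
        frames.append((seg.phoneme, start, end))
    return frames


# Hypothetical alignment of the word "shoe" (/sh/ /uw/).
alignment = [Segment("sh", 0.10, 0.25), Segment("uw", 0.25, 0.50)]
print(to_frames(alignment))  # [('sh', 9, 22), ('uw', 22, 43)]
```

Rounding both boundaries with the same rule keeps adjacent phonemes contiguous, so the end frame of one phoneme is the start frame of the next.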

The misproduced phoneme is located and removed, together with its immediate neighborhood.
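The removal step can be pictured as masking frames. The sketch below assumes the index of the misproduced phoneme is already known (for instance, from the word the app asked for) and blanks that phoneme's frames plus a small margin on each side. The `remove_phoneme` helper, the two-frame default margin, and filling with zeros are hypothetical choices made for illustration.

```python
from typing import List, Tuple

Spectrogram = List[List[float]]  # frames x mel bins


def remove_phoneme(spec: Spectrogram,
                   frame_alignment: List[Tuple[str, int, int]],
                   target_index: int,
                   context_frames: int = 2,
                   fill_value: float = 0.0) -> Tuple[Spectrogram, List[bool]]:
    """Blank the frames of one phoneme plus `context_frames` on each side.

    frame_alignment holds (phoneme, start_frame, end_frame), end exclusive.
    Returns the masked spectrogram and a per-frame mask (True = removed).
    """
    _, start, end = frame_alignment[target_index]
    n_frames = len(spec)
    lo = max(0, start - context_frames)
    hi = min(n_frames, end + context_frames)
    mask = [lo <= t < hi for t in range(n_frames)]
    masked = [[fill_value] * len(frame) if removed else list(frame)
              for frame, removed in zip(spec, mask)]
    return masked, mask


# Toy 10-frame, 3-bin spectrogram; /sh/ (index 0) was misproduced.
spec = [[float(t)] * 3 for t in range(10)]
masked, mask = remove_phoneme(spec, [("sh", 2, 5), ("uw", 5, 10)], 0,
                              context_frames=1)
print(mask)  # frames 1..5 are removed
```

Removing some surrounding context, not just the phoneme itself, gives the generator room to produce natural transitions into and out of the corrected sound.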

A new speech spectrogram is then generated from the processed spectrogram with the missing phoneme. The generator is a deep neural network trained to synthesize the missing speech, much like inpainting of images.
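The generator itself is a trained neural network and is not reproduced here. The sketch below only illustrates the inpainting idea around it: a deliberately naive stand-in, linear interpolation between the frames bordering the gap, fills the removed frames, and the output keeps the original frames everywhere outside the mask, as is common in inpainting models. The `interpolate_gap` and `inpaint` helpers are hypothetical, and whether the paper composes its output exactly this way is an assumption.

```python
from typing import Callable, List

Spectrogram = List[List[float]]  # frames x mel bins
Generator = Callable[[Spectrogram, List[bool]], Spectrogram]


def interpolate_gap(masked: Spectrogram, mask: List[bool]) -> Spectrogram:
    """Naive stand-in for the neural generator: fill each masked run by
    linear interpolation between the unmasked frames that border it."""
    n = len(masked)
    out = [list(frame) for frame in masked]
    t = 0
    while t < n:
        if not mask[t]:
            t += 1
            continue
        gap_start = t
        while t < n and mask[t]:
            t += 1
        gap_end = t  # first unmasked frame after the gap (exclusive end)
        left = masked[gap_start - 1] if gap_start > 0 else None
        right = masked[gap_end] if gap_end < n else None
        if left is None:
            left = right
        if right is None:
            right = left
        if left is None:  # everything is masked: nothing to interpolate from
            continue
        width = gap_end - gap_start + 1
        for k in range(gap_start, gap_end):
            alpha = (k - gap_start + 1) / width
            out[k] = [(1 - alpha) * a + alpha * b for a, b in zip(left, right)]
    return out


def inpaint(masked: Spectrogram, mask: List[bool],
            generator: Generator) -> Spectrogram:
    """Keep original frames outside the mask; take generated frames inside it."""
    generated = generator(masked, mask)
    return [list(g) if m else list(o)
            for o, g, m in zip(masked, generated, mask)]


masked = [[0.0], [0.0], [0.0], [0.0], [4.0]]
mask = [False, True, True, True, False]
print(inpaint(masked, mask, interpolate_gap))  # [[0.0], [1.0], [2.0], [3.0], [4.0]]
```

A real generator would replace `interpolate_gap` with a network that produces a plausible /sh/ in the speaker's voice; the composition step stays the same.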

The newly generated spectrogram is converted back to a waveform using the HiFi-GAN vocoder and played back to the user. The synthesized speech maintains the user's original voice, but with the corrected phoneme /sh/. It sounds like this:

Why is it exciting? For the very first time, we can take misproduced speech and correct it. Moreover, the proposed algorithm does not need to be trained on parallel data; that is, during training it never needs to hear a speaker pronounce the same phrase both incorrectly and correctly. Finally, the algorithm is trained on “healthy” speech and does not need to be adapted to a new user.
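One way to see why no parallel data is needed: a model that only fills in gaps can be trained on correctly pronounced speech by cutting out a phoneme and asking it to reconstruct what was there. The sketch below builds such a self-supervised training pair and scores a prediction with an L1 loss over the removed frames only. The random phoneme choice, the context margin, the `make_training_pair` and `masked_l1` helpers, and the masked L1 loss are illustrative assumptions, not the paper's exact training recipe.

```python
import random
from typing import List, Tuple

Spectrogram = List[List[float]]  # frames x mel bins


def make_training_pair(spec: Spectrogram,
                       frame_alignment: List[Tuple[str, int, int]],
                       rng: random.Random,
                       context_frames: int = 2):
    """Self-supervised example from healthy speech: blank one random phoneme
    (plus context) in the input; the untouched spectrogram is the target."""
    _, start, end = rng.choice(frame_alignment)
    n = len(spec)
    lo, hi = max(0, start - context_frames), min(n, end + context_frames)
    mask = [lo <= t < hi for t in range(n)]
    model_input = [[0.0] * len(f) if m else list(f) for f, m in zip(spec, mask)]
    return model_input, mask, spec


def masked_l1(prediction: Spectrogram, target: Spectrogram,
              mask: List[bool]) -> float:
    """Mean absolute error computed over the removed frames only."""
    total, count = 0.0, 0
    for p_frame, t_frame, m in zip(prediction, target, mask):
        if m:
            total += sum(abs(a - b) for a, b in zip(p_frame, t_frame))
            count += len(t_frame)
    return total / count if count else 0.0


rng = random.Random(0)
spec = [[float(t), float(t)] for t in range(8)]
alignment = [("a", 0, 3), ("b", 3, 6), ("c", 6, 8)]
model_input, mask, target = make_training_pair(spec, alignment, rng,
                                               context_frames=1)
# A real model would predict `target` from `model_input`; here the blank
# input itself is scored, so the loss is positive.
print(masked_l1(model_input, target, mask))
```

At inference time the same model receives a spectrogram whose gap was created by removing a misproduced phoneme, which is why it never needs to see mispronounced training examples.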

Bringing AI into language learning and speech therapy could greatly improve both the effectiveness of the process and user engagement. It might also offer a new way to help people suffering from SSD in rural areas and in African countries.

You can read more here:

Talia Ben Simon, Felix Kreuk, Faten Awwad, Jacob T. Cohen, Joseph Keshet, Correcting Mispronunciations in Speech using Spectrogram Inpainting, The 23rd Annual Conference of the International Speech Communication Association (Interspeech), Incheon, Korea, 2022.